writing tools

and the state of AI in 2024

I haven’t written a product review in this column before, but last month I took up a Black Friday offer on a writing/grammar tool, so I thought I’d share my experiences so far and also talk about the state of generative AI these days.

For context, I don’t use any writing tools while writing first drafts. I even turn off spellchecking, because I find the slightest distraction disturbs my concentration. In any case, I happen to be very good at spelling and grammar. When I turn spelling/grammar checking back on, all it tends to find are a handful of typos and the odd problem such as a subject and verb not agreeing in number, usually because I edited a sentence after first writing it.

After decades of sticking to free open source software on principle, all the tools I use nowadays cost money (making honest reviews much more important). I use Scrivener for all my writing, which is a truly excellent package. It doesn’t have a built-in spelling and grammar checker, which suits me for new writing. It can check spelling using the system dictionary, which is fine for a pass through to pick up typos.

I use a Mac app which provides the (Shorter) Oxford English Dictionary and Chambers Thesaurus, and I do sometimes use these while writing, as well as during editing. I’ve always been a fan of the thesaurus as a way of finding the most apt and expressive word. I used to waste a lot of time, while writing, flipping through my thesaurus chasing ideas.

I sometimes export my work from Scrivener into MS Word to see what its spelling and grammar checker has to say. The version I have (Word for Mac) underlines spelling queries in red and grammar in blue. Over 90% of its grammar suggestions disagree with my use of commas or hyphens (for example, it thinks house-husband should be smooshed together into househusband). Most of its comma suggestions could be appropriate for non-fiction writing, but not for fiction, where an aim is to establish a distinctive narrative voice. Most of its other suggestions are to make the language more concise, for example to replace “once in a while” with the blander “occasionally”. Again, it’s alright for making homogeneous, businesslike writing but rubbish for fiction. Even so, in the later stages of polishing, I find looking through what it highlights a useful focus for my attention – even if, 9 times out of 10, my response is “no, that’s better as it is”, once in a while it can throw up something that I can improve.

It was for this purpose that I recently bought a subscription to ProWritingAid. I’d looked at it a year or two ago (and also the similar product Grammarly) and remained unconvinced, but the combination of a big discount and its new integration into Scrivener persuaded me to give it a try. For a Scrivener user, the big advantage is that it highlights grammar issues by underlining them in your document, like MS Word. And, as with Word, I found most of its suggestions useless. Again, most of them seem aimed at writing clear but bland non-fiction: it will highlight every modifier for deletion (for example, if someone is “quite unaware” of something and “a bit worried”, it suggests you should simply state that they are unaware and worried). With the “vivid verbs” checkbox enabled, it highlights every single occurrence of “was” in a text – thousands and thousands of them, in a novel – to ask if they couldn’t be more vivid. But again, even if you ignore 99% of these (and you should!), I still think it’s helpful to have attention drawn to these spots in the text, to consider them for improvement in the final polishing phase. In fiction writing in general, things like vivid verbs are good, and things like over-long sentences are bad; and it’s worth giving them some thought even if the outcome is a conscious decision to leave them as you wrote them, because occasionally you will think of something better, just like you do when using a thesaurus. These conscious decisions about every sentence are what’s hard about editing a hundred-thousand-word text: after a few minutes, the eye starts to slip too easily over sentences that you remember writing, and you know what you meant. The difficult part of writing is feeling how a sentence will land with a reader, and these tools provide help as a kind of very dim but very persistent reader.

To this extent, ProWritingAid doesn’t add much on top of the kinds of thing MS Word offers, except for the advantage of not having to fire up the annoying bloatware that is Word. (It does have its own irritations: it’s buggy. For example, it often highlights two sentences separated by a full stop and a space, with a spurious warning that it’s one long sentence.) It does have dozens of other tools, such as the ability to detect repeated words and phrases, which I liked, and dozens of reports it can generate on writing style, which I’ve hardly looked at yet (so this isn’t a proper product review). But since the last time I looked at it, it has added some generative AI or “genAI” features.

Last year, in all about AI and AI for writers, I discussed the new genAI chatbots, noting that they’re not really AI at all, and are currently being heavily subsidised by investors, and I talked about prompting these genAI tools to come up with character or situation ideas. The art of genAI has moved on over the last year. Not only is “prompt engineering” becoming ever more sophisticated so as to get useful answers out of genAIs, but increasingly the “hallucination problem” (where genAI will give a factually wrong response) is being mitigated by a number of techniques: combining multiple models, and limiting the answers a model can give, for example by selective detuning of the model, or combining a genAI model with a more deterministic, factual data source. I don’t believe the hallucination problem is going to be solved anytime soon, but the mitigations are improving; in short, genAI tools are getting rapidly better at the moment. I have seen this lots of times in the past, where a technology improves fast as low-hanging fruit is picked off, then progress stalls, funding dries up, and it all goes quiet. In my experience of hearing about AI research over the last 40 years, these plateaux usually last about 10 years. I reckon we will probably reach that point with genAI in another year or so.

Anyway, to get back on-topic, tools like ProWritingAid apply genAI to the sentence level rather than at the idea-generating stage. ProWritingAid has a “rephrase” tool which offers suggestions to modify a highlighted sentence in a variety of ways, for example to make it more formal, more emotional, more sensory and several more. (Apple’s new Writing Tools feature is very similar, but currently only has four, very business-oriented options: rewrite, friendly, professional or concise.) So for example, if I highlight a line of dialogue where one character points out that a bike chain looks loose, the sensory suggestions include adding “his voice a hum against the background of the busy workshop” and “she mumbled, pointing a finger at the almost severed link”; emotional gives “a cold dread tightened my throat as I stammered…”; formal “I’ve noticed your chain appears somewhat loose” and so on.

Meanwhile, ProWritingAid’s “sparks” tool can rewrite whole paragraphs in a choice of styles. For example, asking it to add sensory detail produced this:

Your chain looks a bit loose. As I glance at your bicycle, I notice the chain hanging precariously, swaying in the gentle breeze. I can hear the faint clinking sound as the metal links brush against each other. The scent of oil lingers in the air, reminding me of the recent maintenance work. I reach out and touch the chain, feeling its slight slackness under my fingertips.

For a fiction writer, all this is close to useless; I would never in a million years describe someone’s voice as a hum or a bike chain as clinking. So far, I’ve never once accepted one of the tool’s suggestions. And yet, just now and then, when I’m having trouble getting phrasing right, having access to an endless supply of suggestions can get me to the point where one of them sparks a new idea of my own that helps me. For example, the “scent of oil” might prompt me to think of what other scents are in the air and use one of those, out of my own imagination. So once again, I find it’s not the suggestions of these tools that are directly useful; it’s the way they bring focus onto specific words and help me think about them for myself. Other people might value different aspects of the tool, but for me, it’s much the same as a sort of very fast thesaurus. What I’ve always liked about using a thesaurus while writing is serendipity: coming across a good word that I didn’t immediately associate with the concept I’m trying to articulate, but which creates a new and fresh association that I can use.

I must mention that there are some big ethical questions over the use of genAI that I didn’t know about when I wrote last year’s columns. It turns out that many of the companies developing genAI models have stolen very large quantities of text without consulting the copyright holders. ProWritingAid doesn’t say where its training text comes from, but they do say they won’t use your text to improve their service. This sets them apart from Grammarly, who will use your text to improve their service (meaning they may incorporate it into their models), and only promise not to share it with their partners.

As I wrote a few weeks ago in queer querying, I’m looking for a literary agent at the moment. (I’ve now written to 10 agents, and have had 4 rejections back and 2 who didn’t reply at all.) In seeking LGBT-positive agents, I’ve looked at a huge number of agents’ web pages, and I’ve noticed that some of them now say they don’t accept works made with AI tools. I suspect this is generally less to do with ethics and more for a practical reason: anything genAI suggests could turn out to have come verbatim from one of its training texts, and trigger someone’s plagiarism-detection tool. As genAI gets ever more sophisticated, there is a classic arms race going on with anti-plagiarism tools which are getting ever better at spotting AI-generated content. In today’s world, every writer should know this.

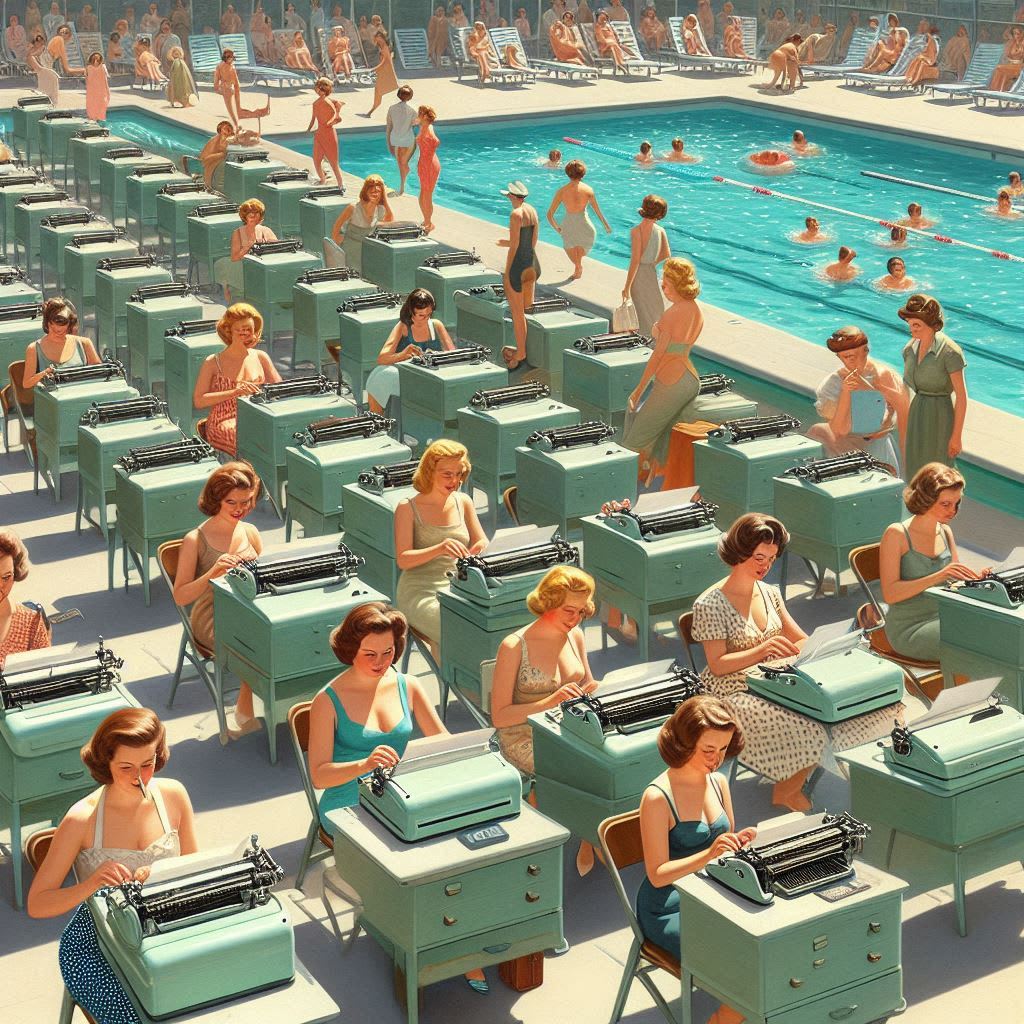

We all use word-processors nowadays because they save effort on retyping and proofreading, even though this has largely eliminated the professions of typists and proofreaders. Automation alone isn’t enough to make a tool unethical: automation is inevitable. What’s crucial when thinking about genAI tools is to understand what they should automate. Using them to automate writing itself is akin to plagiarism, and unethical (as well as producing spectacularly crappy results, currently). Following a trail of associations through a thesaurus is time-consuming, and genAI suggestion tools speed this up; using suggestions to spark your own creativity isn’t unethical and just speeds up a relatively tedious part of creative writing. Of course, when genAI stops being subsidised and gets more expensive, it may not be worth it any more, but at the moment it’s interesting to play with: that’s my current position.